- simple.ai by @dharmesh

OpenAI Just Released Open Models (this is a Very Big Deal)

A quick guide to run the models locally on your computer

Yesterday, OpenAI released GPT-OSS 20B and GPT-OSS 120B--their first open-weight models since GPT-2 back in 2019--and I've barely slept since.

I'm like a kid in a candy store! (I don't like candy, but I do love groundbreaking AI releases.)

Not only did I manage to get the 20B model running smoothly on my MacBook Pro, but I also have the massive 120B parameter version running locally as well.

While the 120B version requires powerful hardware to run (I'm using an M4 Max with 128GB of RAM), the 20B version is much more accessible for everyday developers and runs great on consumer hardware with just 16GB of memory.

So in today’s post, I’m breaking down:

- Why this release is a massive shift for OpenAI and developers

- How to get these models running on your own machine (it's easier than you think)

- The pricing and speed advantages that developers should know

Why it’s an Important Moment in AI

After years of keeping their best models locked behind APIs, the launch of gpt-oss is probably OpenAI's biggest gift to developers yet.

Screenshot from OpenAI’s blog post

It’s significant in a few ways, but I’ll do my best to highlight it from each point of view:

For OpenAI: This is their first open-weight release since GPT-2 back in 2019. By making these models freely available under an Apache 2.0 license, they're changing their relationship with the developer community. Instead of just being an API provider, they're now contributing directly to the open-source AI ecosystem again.

For developers: The 120B model performs on par with o4-mini on reasoning benchmarks, while the 20B model achieves similar results to o3-mini. But here's the kicker--you can run them completely offline on your own hardware. No API costs, no rate limits, no internet dependency.

Technical breakthrough: Both models were trained using techniques from the o3 series and support three reasoning efforts (low, medium, high) just like the closed o-series models. Developers get full access to the chain-of-thought reasoning process that makes these models so capable.

Now, let me pause and reflect on just how remarkable this moment really is (not just the new GPT-OSS, but locally runnable models in general).

The entirety of the 120B parameter GPT-OSS model is about 65GB. Small enough to fit on a $15 128GB USB stick. That USB drive contains a model that can write prose and poetry, knows vast amounts about virtually every domain and discipline, and can reason through complex questions.

Yes, you need powerful hardware to run the model (I'm using an M4 Max with 128GB of RAM), but the fact that this level of intelligence can be reduced to a collection of numbers that fits in your pocket is mind-boggling.
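To put rough numbers on that, here's a back-of-the-envelope sketch. It takes the figures above at face value (a nominal 120 billion parameters, about 65GB on disk) and assumes decimal gigabytes; the variable names are just for illustration.

```python
# Back-of-the-envelope check of the figures quoted above.
PARAMS = 120e9        # nominal parameter count ("120B"), taken at face value
MODEL_BYTES = 65e9    # "about 65GB", assuming decimal gigabytes
USB_BYTES = 128e9     # capacity of a 128GB USB stick

# Average storage per parameter, in bits
bits_per_param = MODEL_BYTES * 8 / PARAMS

# Share of the USB stick the model would occupy
usb_fraction = MODEL_BYTES / USB_BYTES

print(f"{bits_per_param:.1f} bits per parameter")  # 4.3 bits per parameter
print(f"{usb_fraction:.0%} of the USB stick")      # 51% of the USB stick
```

That works out to a little over 4 bits per parameter on average, far below the 16 bits per weight that half-precision formats use, and the whole thing takes up only about half the stick.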

How to Run GPT-OSS Locally (The Easy Way)

There are a few ways you can get GPT-OSS running locally, but the simplest method I've found is through Ollama, following this handy guide from OpenAI's Cookbook blog.

Here's the step-by-step process:

1. Pick your model:

GPT-OSS-20B: The smaller model, works with ≥16GB VRAM or unified memory. Can run on higher-end consumer GPUs or Apple Silicon Macs. (I'd recommend starting here for most people).

GPT-OSS-120B: The full-sized model, best with ≥60GB VRAM or unified memory. Built for multi-GPU setups or beefy workstations.
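If you want to encode that choice in a script, a minimal sketch might look like the following. The function name `pick_model` is hypothetical; the 16GB and 60GB thresholds are the ones quoted above, and the return values are the Ollama model tags used in the next step.

```python
from typing import Optional


def pick_model(memory_gb: float) -> Optional[str]:
    """Suggest a gpt-oss tag for a given amount of VRAM or unified memory.

    Thresholds follow the guidance above: >=60GB for the 120B model,
    >=16GB for the 20B model. Returns None when neither is likely to fit.
    """
    if memory_gb >= 60:
        return "gpt-oss:120b"
    if memory_gb >= 16:
        return "gpt-oss:20b"
    return None


print(pick_model(128))  # gpt-oss:120b
print(pick_model(16))   # gpt-oss:20b
print(pick_model(8))    # None
```

These are rules of thumb, not hard limits: other apps competing for memory will affect what actually runs well.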

2. Quick setup:

You'll need to use your computer's command line (also called Terminal on Mac or Command Prompt on Windows--it's like a text-based way to give your computer instructions):

# Install Ollama (get it from ollama.com/download)

# Pull the model you want:
ollama pull gpt-oss:20b
# OR
ollama pull gpt-oss:120b

3. Start chatting:

ollama run gpt-oss:20b

That's it! You now have a state-of-the-art AI model running locally on your computer.
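If you'd rather confirm from a script that the model was pulled, here's a small sketch using only Python's standard library. It assumes Ollama is running on its default port (11434) and relies on its `/api/tags` endpoint, which lists locally installed models; the helper names are my own.

```python
import json
import urllib.request

OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"  # assumes the default port


def installed_models(tags_response: dict) -> list:
    """Extract model names from a parsed /api/tags response."""
    return [model["name"] for model in tags_response.get("models", [])]


def fetch_installed_models(url: str = OLLAMA_TAGS_URL) -> list:
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return installed_models(json.load(resp))
```

With Ollama running, `"gpt-oss:20b" in fetch_installed_models()` should be true once the pull has finished.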

4. Use the API:

The beautiful thing about Ollama is that it exposes a Chat Completions-compatible API. This is useful if you want to integrate the AI into your own applications, automate repetitive tasks, or build custom workflows:

from openai import OpenAI

# Point the official OpenAI client at the local Ollama server
client = OpenAI(
    base_url="http://localhost:11434/v1",  # Local Ollama API
    api_key="ollama",  # Dummy key
)

response = client.chat.completions.create(
    model="gpt-oss:20b",
    messages=[
        {"role": "user", "content": "Explain quantum computing"}
    ],
)

# Print the model's reply
print(response.choices[0].message.content)

5. Function calling:

The models support tool calling as part of their chain-of-thought reasoning, so you can build agents that interact with external systems--all running locally.

This is powerful because it means your local AI can actually do things like check your calendar, manage files, or pull data from databases, not just hold conversations.
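Here's a rough sketch of what that could look like, assuming the local endpoint accepts the standard Chat Completions `tools` parameter. The `list_files` tool, the `REGISTRY` dictionary, and the helper names are all hypothetical, made up for illustration; a real agent would also send the tool results back to the model for a final answer.

```python
import json
import os


def list_files(directory: str) -> list:
    """Hypothetical example tool: return the sorted file names in a directory."""
    return sorted(os.listdir(directory))


# Tool description in the Chat Completions "tools" format
TOOLS = [{
    "type": "function",
    "function": {
        "name": "list_files",
        "description": "List the files in a directory.",
        "parameters": {
            "type": "object",
            "properties": {"directory": {"type": "string"}},
            "required": ["directory"],
        },
    },
}]

# Maps tool names the model may request to local Python functions
REGISTRY = {"list_files": list_files}


def run_tool_call(name: str, arguments_json: str) -> str:
    """Execute one requested tool call and return its result as JSON text."""
    if name not in REGISTRY:
        return json.dumps({"error": f"unknown tool: {name}"})
    args = json.loads(arguments_json)
    return json.dumps(REGISTRY[name](**args))


def ask_with_tools(prompt: str, model: str = "gpt-oss:20b") -> list:
    """Send a prompt with TOOLS attached and run any tools the model requests."""
    from openai import OpenAI  # imported here so the helpers above work without it

    client = OpenAI(base_url="http://localhost:11434/v1", api_key="ollama")
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        tools=TOOLS,
    )
    calls = response.choices[0].message.tool_calls or []
    return [run_tool_call(c.function.name, c.function.arguments) for c in calls]
```

Keeping the registry explicit means the model can only trigger functions you've deliberately exposed, which matters once tools start touching real files or data.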

Economics: Speed, Cost, and Control

Outside of just running these models locally, things also get really interesting from a business perspective.

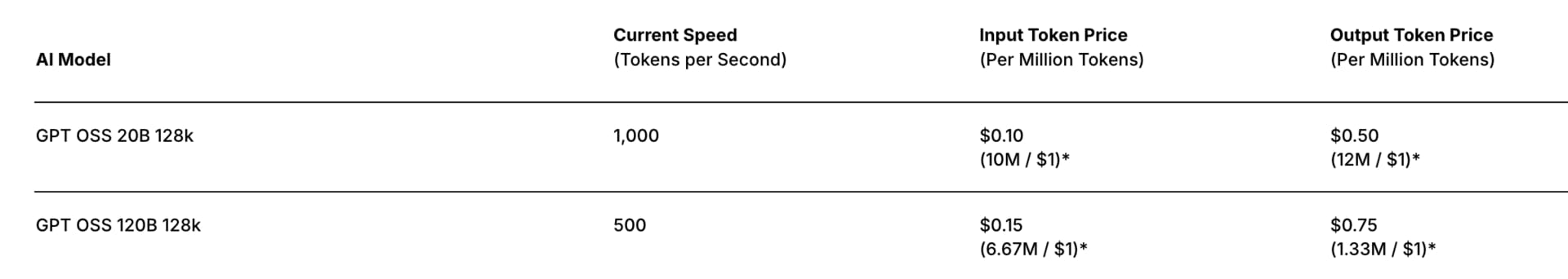

For example, just take a look at the API pricing:

GPT-OSS-120B: $0.15 per million input tokens / $0.75 per million output tokens

GPT-OSS-20B: $0.10 per million input tokens / $0.50 per million output tokens
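To see what those rates mean for a given workload, here's a quick sketch. The prices are the ones listed above; the function name and the example token counts are made up for illustration.

```python
# Prices in dollars per million tokens, as listed above
PRICES_PER_MILLION = {
    "gpt-oss-120b": {"input": 0.15, "output": 0.75},
    "gpt-oss-20b": {"input": 0.10, "output": 0.50},
}


def api_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the dollar cost of a workload at the listed per-token rates."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 2M input tokens and 500K output tokens
print(f"${api_cost('gpt-oss-120b', 2_000_000, 500_000):.3f}")  # $0.675
print(f"${api_cost('gpt-oss-20b', 2_000_000, 500_000):.3f}")   # $0.450
```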

I can't express enough how amazing it is that OpenAI just released an AI that performs better than GPT-4.1 for a fraction of the cost and with incredible speed. I’d encourage anyone doing serious AI development work to start experimenting.

For developers, this means:

- More experimentation without worrying about API costs

- Data privacy since nothing leaves your machine

- Offline capability for apps that need to work without internet

- Significantly more customization potential

- Predictable costs for production applications

To reiterate, running the large model (gpt-oss-120b) locally does require serious hardware--at minimum 60GB of RAM and preferably dedicated GPUs. But for teams with the right infrastructure, the economics are compelling.

What I love about open-weight models is that they make it easier to build on the shoulders of giants. You get world-class AI capability as your foundation, then customize and optimize from there.

To me, it feels like the first time I did a View|Source in my browser to see how a particular web page or web app was constructed. It’s just really instructive to see what happens behind the scenes. More importantly, developers much smarter than I am get to peek inside the models and tweak them, not only for their own purposes and use cases but also for the world at large. I think we’re going to see a lot of innovation on top of these new open models.

Closing Thoughts

The release is important because it's democratizing access to state-of-the-art capabilities in a way that gives developers way more control over their AI stack.

Open-source models that run locally have existed for a while, but this is the first time we're getting genuine OpenAI-level performance without any strings attached. No API dependencies, no usage limits, no gatekeepers.

What strikes me most is how this feels like the early days of the web… That sense that we're just beginning to understand what's now possible.

I encourage everyone to download at least the 20B model and experiment with it. The Ollama setup is straightforward enough for anyone to get running, and early experimentation with local AI will pay dividends as this technology evolves.

It’s time to build!

—Dharmesh (@dharmesh)
