- simple.ai by @dharmesh

Context Engineering: Going Beyond Prompts To Push AI

How to optimize what AI *thinks* about

If you've been around AI for any length of time, you've come across the idea of prompts (it's how you give instructions to an LLM like ChatGPT).

As it turns out, there are things you can do to better craft your prompts to improve the quality of results. That craft is known as "prompt engineering"--and it's exactly what it sounds like, engineering a prompt so the LLM does what you want.

But here's what's fascinating: as context windows have exploded from 4K to over 1M tokens in the last two years, something more powerful than prompt engineering has emerged: Context Engineering. (h/t to my friend Tobi from Shopify for coining this, I think)

The shift may seem simple but the framing is completely different--instead of optimizing how you ask, you're now optimizing what the AI has access to when it thinks.

So in today’s newsletter, I’m writing a more “instructional” post on:

How context windows work and why they're important

The evolution of prompt engineering to context engineering

Why this shift matters for anyone building with AI

What is a Context Window?

Think of it as a big sheet of paper that you pass to the LLM.

You can write a certain number of words on it. Those words can be "write me a limerick about pickleball." Or, they can be "Here's a 5,000-word essay, give me a 100-word summary" (and you include the whole essay on that big sheet of paper).

I thought this was funny (thanks GPT-4o)

Context windows have a limit, usually expressed as a number of "tokens." A token is roughly ¾ of an English word or about four characters. "ChatGPT" might be split into two tokens, "Chat" and "GPT," though the exact split depends on the model's tokenizer. This matters because billing, latency, and memory all scale with token count.
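To make the rule of thumb concrete, here's a minimal sketch of a token estimator. The function names are made up for illustration, and the numbers are only the rough ratios mentioned above. A real tokenizer will give different counts, so treat this as a back-of-the-envelope check.

```python
import math

CHARS_PER_TOKEN = 4      # rule of thumb: about four characters per token
WORDS_PER_TOKEN = 0.75   # rule of thumb: a token is roughly 3/4 of a word


def estimate_tokens_from_chars(text: str) -> int:
    """Rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_from_words(text: str) -> int:
    """Rough token estimate based on whitespace-separated word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def fits_in_window(text: str, window_tokens: int) -> bool:
    """Check whether a text plausibly fits in a window of the given size."""
    return estimate_tokens_from_chars(text) <= window_tokens


prompt = "write me a limerick about pickleball"
print(estimate_tokens_from_chars(prompt))   # estimate from characters
print(estimate_tokens_from_words(prompt))   # estimate from words
print(fits_in_window(prompt, 4000))
```

The two estimates won't always agree, which is a good reminder that these are approximations and not real token counts.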

Here's a key limitation with LLMs: they only know what they were trained on and what's provided to them in the context window.

But as context windows have exploded in token size over the last 2 years, we've gotten increasingly clever about what we put in that context window, such as:

How AI "Remembers" Your Conversation

You may have noticed that ChatGPT and Claude have good short-term memory. That's why you can ask follow-up questions and they know what you're talking about. The way that happens? Behind the scenes, they're actually passing your prior prompts and the outputs into the context window. It's kind of like that movie Memento, where the main character forgets everything, and has to write notes on his body so he can remember who he is and what’s happening (great movie by the way).
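Here's a small sketch of that "notes on the body" trick. It assumes a stand-in `fake_model` function in place of a real LLM call, and the `Conversation` class is a hypothetical name. The point is only that the app resends the whole transcript on every turn.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps the running transcript and resends all of it on every turn."""

    def __init__(self, model: Callable[[List[Message]], str]) -> None:
        self.model = model  # hypothetical: any function mapping messages -> reply
        self.history: List[Message] = []

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        # The model is stateless here; it sees only what we pass in.
        reply = self.model(list(self.history))
        self.history.append({"role": "assistant", "content": reply})
        return reply


def fake_model(messages: List[Message]) -> str:
    """Stand-in for a real LLM call: reports how much context it received."""
    return f"I can see {len(messages)} message(s) in my context."


chat = Conversation(fake_model)
print(chat.ask("Who directed Memento?"))
print(chat.ask("What else did he direct?"))
```

On the second question, the stand-in model receives three messages: the first question, its own first reply, and the follow-up. That growing list is the "memory."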

RAG: Teaching AI on Demand

There's an approach called RAG (Retrieval-Augmented Generation) where we find a set of documents that are related to the user prompt, and then pass those into the context window (thereby "teaching" the LLM precisely the things that will help it answer a question or prompt).
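A toy version of the idea, as a sketch. Real RAG systems typically use embeddings and a vector database for the retrieval step. This example swaps in plain word overlap so it runs with no dependencies. The sample documents and function names are made up for illustration.

```python
import re
from typing import List, Set


def words(text: str) -> Set[str]:
    """Lowercase a text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents sharing the most words with the query."""
    query_words = words(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & words(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Put the retrieved documents into the context ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Use the context below to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Pickleball is played on a court with a net 34 inches high at the center.",
    "A limerick is a five-line poem with an AABBA rhyme scheme.",
    "Context windows are measured in tokens.",
]
print(build_prompt("How high is a pickleball net?", docs, k=1))
```

Only the retrieved document ends up on the "sheet of paper." The other two never reach the model.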

Tool Calling: Extending AI's Capabilities

We've also introduced the idea of tool calling. It's a way to tell the LLM: "Hey, pretend like you have access to this set of tools for things like web search, stock price lookups, weather forecasts...whatever." LLMs can then use one or more of the tools provided if it will help them process a prompt.

Here’s a quirky but delightful detail on how tool calling works: When we let the LLM know what tools it has available, it doesn’t actually call the tools itself. The core LLM isn’t built to do that. It just takes context in and produces output. We tell it what tools it has access to, and in its output, it tells the application (like ChatGPT) which tool it would like to invoke. The app then invokes the tool on the LLM's behalf, puts the results in the context window, and sends it all back to the LLM. It’s a clever way to work around the fact that LLMs just work with a context window.
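That round trip can be sketched in a few lines. Everything here is an assumption for illustration: `fake_model` stands in for a real LLM, `get_weather` is a made-up tool, and the JSON shape is not any vendor's actual format. What matters is who does what: the model only names a tool, and the application runs it.

```python
import json
from typing import Callable, Dict, List

Message = Dict[str, str]

# Hypothetical tool registry; the tool name and its behavior are invented.
TOOLS: Dict[str, Callable[[str], str]] = {
    "get_weather": lambda city: f"Sunny in {city}",
}


def fake_model(messages: List[Message]) -> str:
    """Stand-in for an LLM: asks for a tool first, then answers from its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return json.dumps({"answer": f"The forecast says: {last['content']}"})
    return json.dumps({"tool": "get_weather", "argument": "Boston"})


def run(user_text: str, model: Callable[[List[Message]], str]) -> str:
    messages: List[Message] = [
        {"role": "system", "content": f"Available tools: {sorted(TOOLS)}"},
        {"role": "user", "content": user_text},
    ]
    for _ in range(5):  # cap the number of round trips
        output = json.loads(model(messages))
        if "answer" in output:
            return output["answer"]
        # The model only named a tool; the application is what actually runs it.
        result = TOOLS[output["tool"]](output["argument"])
        # The result goes back into the context for the next model call.
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("model never produced a final answer")


print(run("What's the weather in Boston?", fake_model))
```

Notice that the model is called twice: once to pick the tool, and once more after the tool result has been added to the context.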

With a million-token context window, you can feed an AI an entire codebase, a complete business plan, or months of customer support conversations, and it can reason across all of that information simultaneously.

Why Context Engineering Matters

Remember the prompt engineering craze of 2023? Headlines were pushing $300k+ salaries for "Prompt Engineers" at places like Anthropic.

A lot of the jobs went viral because they required no formal CS degree -- just the ability to speak fluent "LLM."

Old screenshot of Anthropic’s Prompt Engineer job listing

While some prompt engineers were hired, it didn't end up being the massive job category many predicted. The reason? Everyone became a prompt engineer. And now, there's something even more important emerging: Context Engineering.

Context Engineering is essentially a higher-level version of Prompt Engineering.

An (overly) simple explanation: Prompt engineering was like learning to ask really good questions. Context engineering is like being a librarian who decides what books someone has access to before they even start reading.

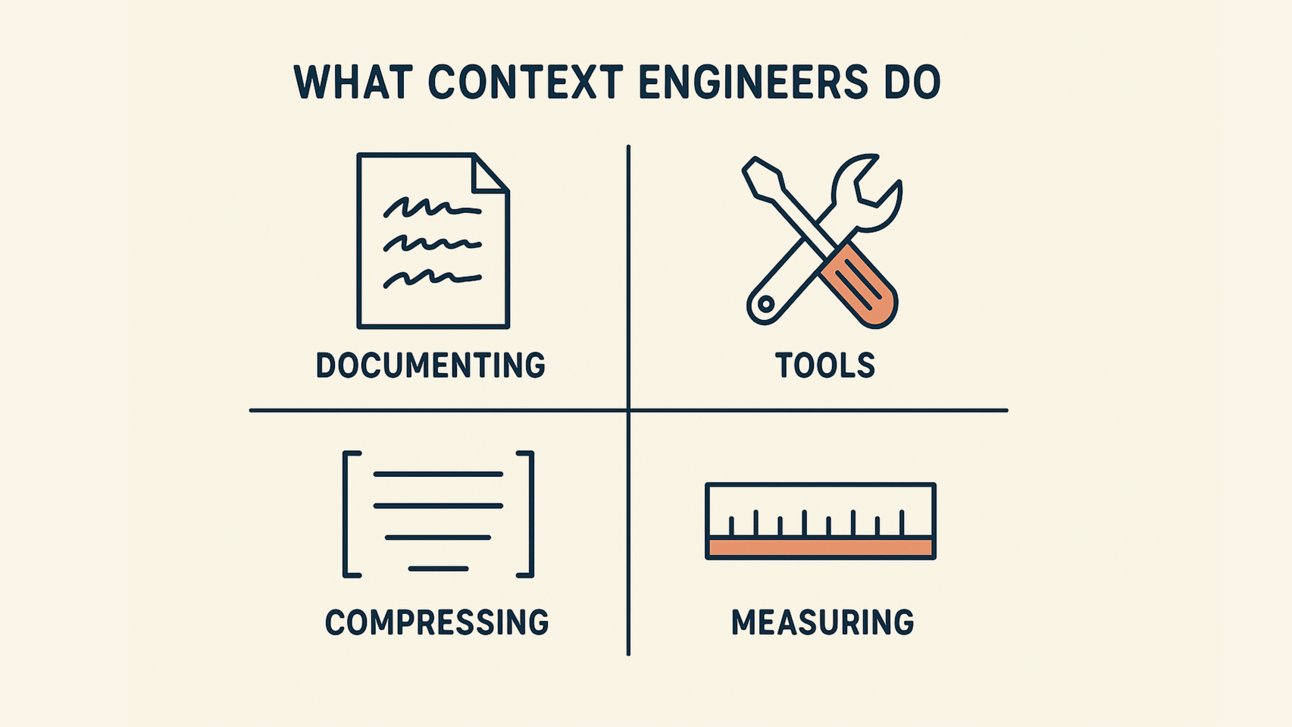

What Context Engineers Actually Do:

Curate: Decide which documents, memories, or APIs matter for each specific task

Structure: Layer system messages → tools → retrieved data → user prompt in optimal order

Compress: Summarize or chunk information to stay under token limits while preserving what matters

Evaluate: Measure accuracy and watch for "context dilution" where irrelevant info distracts the model
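As a rough sketch of the "structure" and "compress" steps, here's one way to layer the pieces in that order while staying under a token budget. The function names, the budget, and the sample strings are all hypothetical. The token count uses the four-characters-per-token rule of thumb and ignores separators, so a real system would use the model's actual tokenizer.

```python
import math
from typing import List


def estimate_tokens(text: str) -> int:
    """Rough estimate using the ~4 characters per token rule of thumb."""
    return math.ceil(len(text) / 4)


def assemble_context(system: str, tools: str, retrieved: List[str],
                     user_prompt: str, budget_tokens: int) -> str:
    """Layer system -> tools -> retrieved data -> user prompt within a budget."""
    fixed = [system, tools, user_prompt]
    remaining = budget_tokens - sum(estimate_tokens(part) for part in fixed)
    if remaining < 0:
        raise ValueError("fixed parts alone exceed the budget")

    kept: List[str] = []
    for doc in retrieved:  # assumed to be sorted most relevant first
        cost = estimate_tokens(doc)
        if cost > remaining:
            continue  # skip anything that does not fit
        kept.append(doc)
        remaining -= cost

    return "\n\n".join([system, tools, *kept, user_prompt])


context = assemble_context(
    system="You are a helpful assistant.",
    tools="Tools: web_search",
    retrieved=[
        "Refunds are issued within 30 days.",
        "Shipping takes five business days.",
    ],
    user_prompt="Summarize our refund policy.",
    budget_tokens=30,
)
print(context)
```

With this small budget, only the first retrieved document makes the cut. Dropping whole documents is the bluntest form of compression. Summarizing them instead is the obvious next step.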

Keep in mind, more context means richer documents and longer conversations. But cost and latency rise roughly linearly with window length. This leaves a ton of room for Context Engineers to discover best practices (many of which are still emerging).

Context engineering requires thinking about information architecture, data strategy, and user experience in ways prompt engineering never did. The companies that master this will have a massive competitive advantage.

As I like to say: Prompting tells the model how to think, but context engineering gives the model the training and tools to get the job done.

The Context-First Future

The prompt engineering hype of 2023 taught us an important lesson: the most valuable AI skills aren't about learning secret phrases or clever tricks.

They're about understanding how to architect intelligent systems that have access to the right information at the right time.

It’s a fundamental shift from optimizing sentences to optimizing knowledge. This matters for anyone building with AI today.

Personally, as someone building in this space with agent.ai, I'm constantly amazed by what becomes possible when you stop thinking about AI as a chatbot and start thinking about it as a reasoning engine with access to the right context and tools.

The prompt engineering era taught us to talk to AI. The context engineering era is teaching us to think with AI.

And if you made it this far, I hope you found this "instructional-style" newsletter helpful. It's a bit different from my usual format, so I'd love to know what you think in the poll below.

—Dharmesh (@dharmesh)

What'd you think of today's email? Click below to let me know.